Collaborative Beats with ARKit

Written by Matthew Ward, Sr Developer

Boom Kat

During some exploration time in early 2019, my colleague Mike Hobizal and I wanted to learn ARKit. We wanted to try building a modular, augmented reality drum kit app. We were curious to see if we could print out pieces of paper labelled with different parts of a drum kit, arrange them in the real world, scan them with a phone, and tap on them to make drum beats. Theoretically, we could print out different drum pieces and create a customized modular kit, which sounded pretty rad. So we dove in.

A quick (and hastily written) primer on ARKit

ARKit is Apple’s framework that gives you quick and easy access to the wonderful world of augmented reality. It uses a device’s camera and motion sensors to track surfaces, recognize images and place virtual objects on real surfaces.

In order for image detection to work, we had to set up a world-tracking AR session. Since we wanted some visual feedback when the app successfully recognized a drum pad, we used world tracking and surface detection to add an overlay on top of the pad that would animate when detected.

Mapping The World

To get started with ARKit, we first needed to run an ARSession. An ARSession handles the complicated image processing and motion handling for creating an augmented world. A session needs a configuration to define the motion and scene tracking behaviors and, luckily, ARKit gives us one called ARWorldTrackingConfiguration that affords us an easy way to detect surfaces.

Next, we needed to show the camera feed by creating an ARSCNView. This view blends the camera’s feed with any 3D objects we create. It ensures the physical and virtual worlds move together to give the illusion of augmented reality. Running the ARSCNView’s session with our ARWorldTrackingConfiguration made us official AR developers. (For a deeper dive on plane detection, check out this tutorial from Apple.)
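The setup described above can be sketched roughly as follows. This is a minimal outline rather than our exact project code: the `DrumKitViewController` class and the `makeWorldTrackingConfiguration()` helper are hypothetical names, and horizontal plane detection is an assumption about which surfaces you want to find. The app also needs a camera usage description in its Info.plist.

```swift
import UIKit
import ARKit

// Hypothetical helper: builds a world-tracking configuration with surface detection.
func makeWorldTrackingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    // Assumption: we only care about flat, horizontal surfaces such as floors and tables.
    configuration.planeDetection = [.horizontal]
    return configuration
}

// Hypothetical view controller that hosts the AR view.
final class DrumKitViewController: UIViewController {
    // ARSCNView renders the camera feed and owns the ARSession.
    let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // Start tracking when the view becomes visible.
        sceneView.session.run(makeWorldTrackingConfiguration())
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Pause tracking to save battery while the view is off screen.
        sceneView.session.pause()
    }
}
```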

Image Detection & You

Image detection using ARKit is simple and straightforward. First, create an AR Resources group in your app’s asset catalog. Then add images, name them, and tell Xcode the images’ physical dimensions. Putting in the physical width and height lets ARKit know how to place the image on a detected surface at the correct distance away from the camera. If we printed out a drum pad on a postcard instead of an 8.5x11 piece of paper and placed it on the floor, ARKit would think that the image was deep beneath the floor instead of lying flat against the surface.

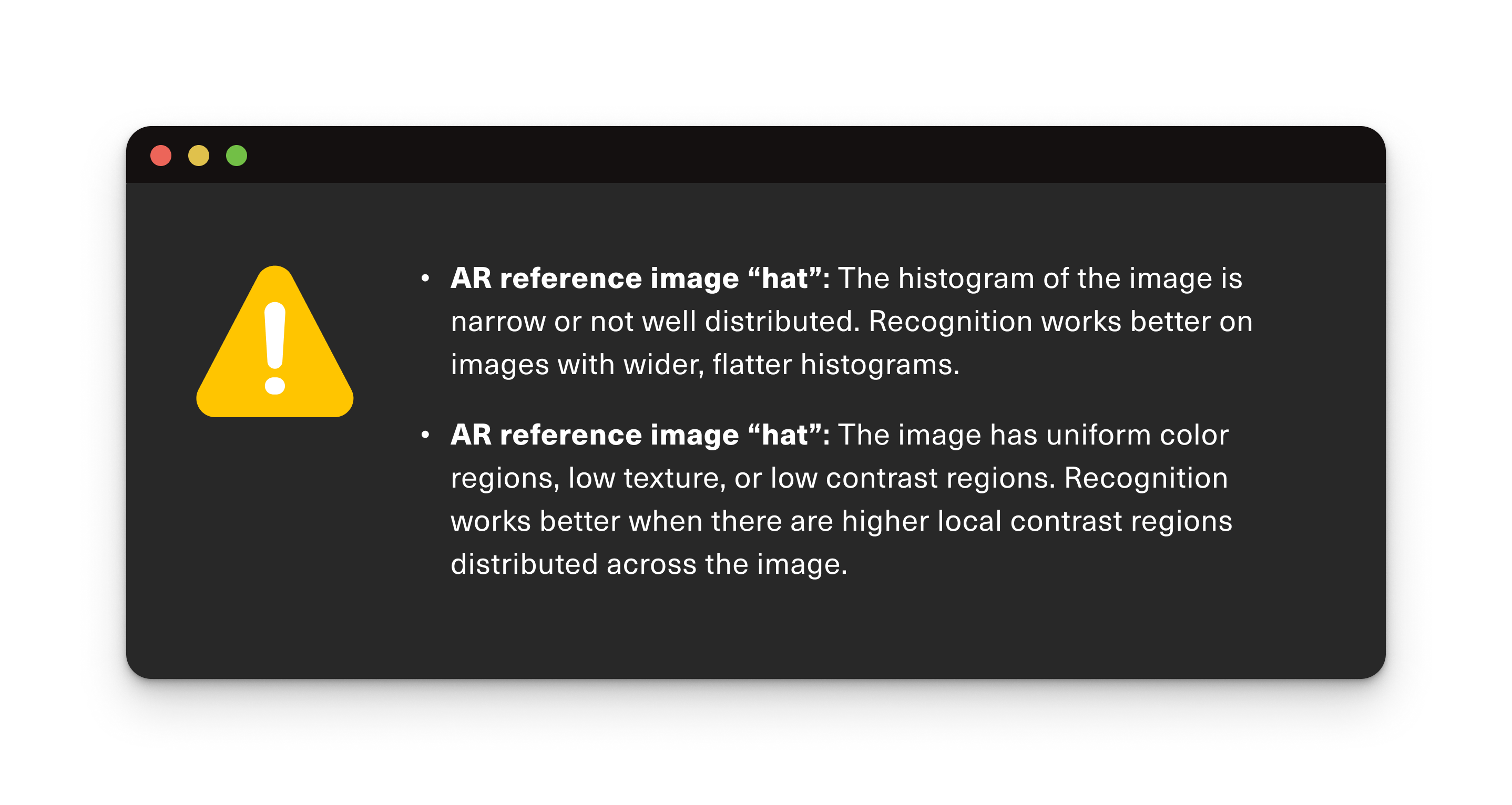

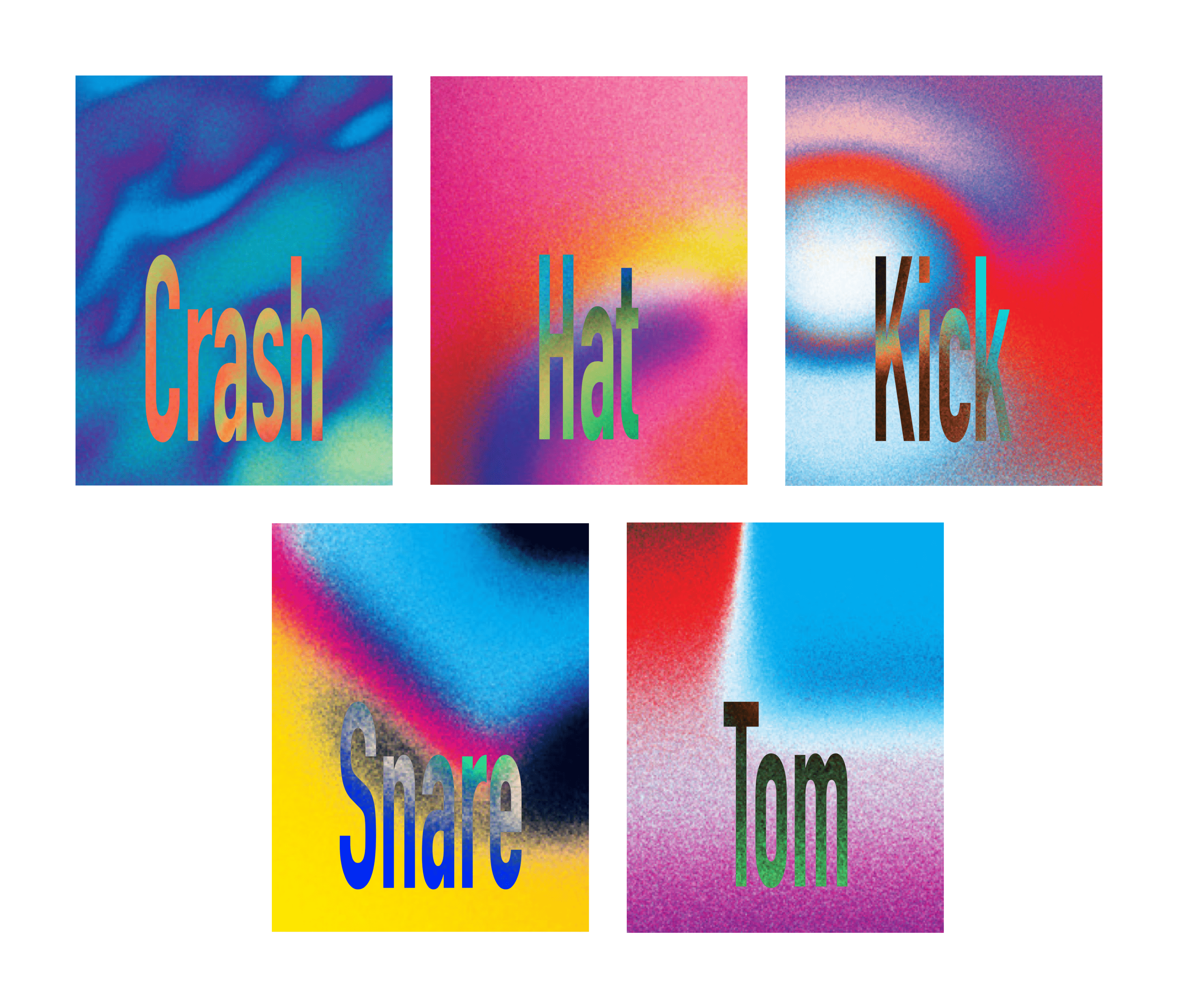
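To see why the declared size matters, here is a small worked example using a simplified pinhole-camera model. It is only an illustration of the geometry, not a description of how ARKit computes depth internally. The focal length, the camera distance, and the 6-inch postcard width are all assumed values.

```swift
import Foundation

/// Distance implied by a pinhole camera model: an object of a known physical
/// width that appears `apparentWidthInPixels` wide must be this far away.
func estimatedDistance(declaredWidth: Double,
                       apparentWidthInPixels: Double,
                       focalLengthInPixels: Double) -> Double {
    return focalLengthInPixels * declaredWidth / apparentWidthInPixels
}

let focalLength = 1500.0    // assumed focal length, in pixels
let trueDistance = 1.0      // assumed: the camera is 1 m above the floor
let letterWidth = 0.2159    // 8.5 in, the width declared in the asset catalog
let postcardWidth = 0.1524  // 6 in, the width of the page actually printed (assumed)

// How wide the small postcard really appears in the camera image.
let apparentWidth = focalLength * postcardWidth / trueDistance

// The distance a detector would infer if it trusted the declared letter-size width.
let estimate = estimatedDistance(declaredWidth: letterWidth,
                                 apparentWidthInPixels: apparentWidth,
                                 focalLengthInPixels: focalLength)
print(estimate)
```

Under these assumptions the estimate comes out at roughly 1.4 m for a page that is really 1 m away, which is the "beneath the floor" effect described above.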

To help ARKit recognize images, they need to have high contrast and be visually distinct. Our initial designs for the pads were fairly minimalistic, only using type to label a page as “Hat”, “Snare” or “Kick”. Xcode surfaced a warning that the images might be hard to detect.

Ultimately, the images were recognized more easily after we amped up the contrast by adding background patterns.

After the initial drum pads were created, we loaded them into our project. We had to add the images to the detectionImages property of our ARWorldTrackingConfiguration.

    func startTracking() {
        // Load every reference image from the "AR Resources" group in the asset catalog.
        guard let referenceImages = ARReferenceImage.referenceImages(inGroupNamed: "AR Resources", bundle: nil) else {
            fatalError("Missing expected asset catalog resources.")
        }

        let configuration = ARWorldTrackingConfiguration()
        configuration.detectionImages = referenceImages

        // Restart the session from scratch so stale anchors do not linger.
        sceneView.session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
    }

While this session runs, ARKit notifies us whenever it detects one of our images.

To confirm to the user that the image was scanned correctly, we placed a white 2D plane with the same size and position as the detected image and added a pulse animation.
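A rough sketch of that overlay follows. It assumes the notification arrives through the ARSCNViewDelegate method `renderer(_:didAdd:for:)` with an ARImageAnchor. The `makeOverlayNode(for:)` helper, the `PadDetectionDelegate` class, the fade timings, and the choice to name the node after the reference image are illustrative assumptions, not our exact code.

```swift
import ARKit
import SceneKit

/// Hypothetical helper: a white plane matching the detected image's size that pulses briefly.
func makeOverlayNode(for physicalSize: CGSize) -> SCNNode {
    let plane = SCNPlane(width: physicalSize.width, height: physicalSize.height)
    plane.firstMaterial?.diffuse.contents = UIColor.white

    let node = SCNNode(geometry: plane)
    node.opacity = 0.0
    // SCNPlane is vertical in its local space; rotate it to lie flat on the image.
    node.eulerAngles.x = -.pi / 2

    // Assumed timings: fade in and out three times.
    let pulse = SCNAction.sequence([
        SCNAction.fadeOpacity(to: 0.8, duration: 0.3),
        SCNAction.fadeOpacity(to: 0.2, duration: 0.3)
    ])
    node.runAction(SCNAction.repeat(pulse, count: 3))
    return node
}

/// Hypothetical delegate object; assign an instance to `sceneView.delegate`.
final class PadDetectionDelegate: NSObject, ARSCNViewDelegate {
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        // Only react to anchors created by image detection.
        guard let imageAnchor = anchor as? ARImageAnchor else { return }
        let referenceImage = imageAnchor.referenceImage

        let overlay = makeOverlayNode(for: referenceImage.physicalSize)
        // Assumption: the asset catalog name identifies the pad, so store it on the node.
        overlay.name = referenceImage.name
        node.addChildNode(overlay)
    }
}
```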

Make Some Noise

Now that we could detect our images, it was time to play the associated drum sound. To do this we had to override touchesBegan, run a hit test on our scene view, find the node that was tapped, and play the sample. We figured out that we could attach the sample player to the actual drum pad to take advantage of the physical space so it acted like a real drum kit. The sounds surround you and get louder or quieter depending on your proximity to the drum pad.

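    override func touchesBegan(_ touches: Set<UITouch>, with event: UIEvent?) {
        for touch in touches {
            // Hit-test in the scene view's coordinate space to find the tapped node.
            let location = touch.location(in: sceneView)
            let sceneHits = sceneView.hitTest(location, options: nil)
            if let sceneHit = sceneHits.first {
                play(sceneHit.node)
            }
        }
    }

    func play(_ node: SCNNode) {
        // The node's name doubles as the sample's file name (<name>.wav).
        if let source = SCNAudioSource(fileNamed: "\(node.name ?? "").wav") {
            // Running the action on the node plays the sound from the pad's position.
            let play = SCNAction.playAudio(source, waitForCompletion: false)
            node.runAction(play)
        }
    }

Jam Session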

At this stage we had a project that was fun to play with. However, we soon discovered that using this project to play with others was even more fun. After separate experiments with peer-to-peer connectivity in an AR space, we wanted to see if we could add multiple players.

Apple has a Multipeer Connectivity framework to connect and communicate with nearby iOS devices. One user acts as the host by starting a session and advertising it to nearby devices. The other users can join the session, which allows the devices to connect and start communicating.

When a user chose to be a host in our app, we created an MCSession and passed in our device name as our unique peer ID. This peer ID displays when another user tries to join to ensure they are connecting with the right session. The next step was to advertise that the MCSession existed. An MCNearbyServiceAdvertiser takes in the peer ID and a serviceType string. The service type acts as an identifier to distinguish our app from any other apps that use Multipeer Connectivity.
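Creating the session itself might look something like the sketch below. The `makeHostSession(displayName:)` helper is a hypothetical name, and requiring encryption is an assumption rather than something our project necessarily did.

```swift
import MultipeerConnectivity

/// Hypothetical helper: creates the host's session from a human-readable name.
func makeHostSession(displayName: String) -> MCSession {
    // The display name is what other users see when they browse for sessions.
    let peerID = MCPeerID(displayName: displayName)
    // Assumption: encrypt all traffic between peers.
    return MCSession(peer: peerID,
                     securityIdentity: nil,
                     encryptionPreference: .required)
}
```

From a view controller you could call `makeHostSession(displayName: UIDevice.current.name)` and read the peer ID back from `session.myPeerID` when setting up the advertiser.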

    // Apple documents serviceType as 1-15 characters: lowercase ASCII letters, digits, and hyphens.
    let advertiser = MCNearbyServiceAdvertiser(peer: myPeerID, discoveryInfo: nil, serviceType: "arbeats")
    advertiser.delegate = self
    advertiser.startAdvertisingPeer()

When a user tries to connect, we receive a call to a delegate method and can choose to authorize that user to connect. Once connected, it was time to jam.

    func advertiser(_ advertiser: MCNearbyServiceAdvertiser,
                    didReceiveInvitationFromPeer peerID: MCPeerID,
                    withContext context: Data?,
                    invitationHandler: @escaping (Bool, MCSession?) -> Void) {
        // Accept every invitation and attach the peer to our session.
        invitationHandler(true, self.session)
    }

Sending The Message

There are a couple of ways to send data to a connected peer: you can send individual packets of data or open a byte stream. We tried both approaches and chose a byte stream because there was less latency. The Multipeer Connectivity framework opens a byte stream with a peer. Once it was open, we could send messages whenever we wanted. We created a struct containing the name of the drum sample. Once a drum pad was hit, the data was sent to the peers, causing the sample to play on their devices.
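One thing to keep in mind with a byte stream is that it delivers bytes, not messages, so a single read can return part of a message or several at once. The sketch below shows one way to handle that with a newline delimiter. It is not what our project did. Only the `name` field of `SoundDescriptor` comes from the description above; the `frame(_:)` function and the `MessageBuffer` type are hypothetical.

```swift
import Foundation

// Assumed shape of the struct; only `name` is described in the text.
struct SoundDescriptor: Codable, Equatable {
    let name: String
}

let delimiter = UInt8(ascii: "\n")

/// Encodes one message as compact JSON followed by a newline delimiter.
func frame(_ descriptor: SoundDescriptor) throws -> Data {
    var data = try JSONEncoder().encode(descriptor)
    data.append(delimiter)
    return data
}

/// Accumulates raw bytes from the stream and yields every complete message.
struct MessageBuffer {
    private var pending = Data()

    mutating func append(_ chunk: Data) -> [SoundDescriptor] {
        pending.append(chunk)
        var messages: [SoundDescriptor] = []
        // Peel off one delimited line at a time; leftover bytes wait for the next read.
        while let newline = pending.firstIndex(of: delimiter) {
            let line = Data(pending[pending.startIndex..<newline])
            pending = Data(pending[pending.index(after: newline)...])
            if let message = try? JSONDecoder().decode(SoundDescriptor.self, from: line) {
                messages.append(message)
            }
        }
        return messages
    }
}

// Usage: two messages arrive split across two reads.
var messageBuffer = MessageBuffer()
let bytes = try! frame(SoundDescriptor(name: "kick")) + frame(SoundDescriptor(name: "snare"))
let first = messageBuffer.append(Data(bytes.prefix(10)))     // incomplete, nothing yet
let rest = messageBuffer.append(Data(bytes.dropFirst(10)))   // both messages complete
```

The sender would write the framed data to the output stream, and the receiver would feed whatever each read returns into the buffer and play every descriptor that comes back.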

    // Sending the data
    func sendSound(_ node: SCNNode) {
        guard let soundName = node.name else { return }
        let soundDescription = SoundDescriptor(name: soundName)
        if let data = try? JSONEncoder().encode(soundDescription) {
            // Copy into a byte array so it can be passed straight to the stream.
            let bytes = [UInt8](data)
            _ = networkSession.outputStream?.write(bytes, maxLength: bytes.count)
        }
    }

    // Receiving the data
    func stream(_ aStream: Stream, handle eventCode: Stream.Event) {
        switch eventCode {
        case .hasBytesAvailable:
            if let input = aStream as? InputStream {
                // 32 bytes is enough for a short sample name; longer messages need a bigger buffer.
                var buffer = [UInt8](repeating: 0, count: 32)
                let numberBytes = input.read(&buffer, maxLength: buffer.count)
                // read returns 0 at the end of the stream and -1 on error.
                guard numberBytes > 0 else { return }
                let data = Data(buffer[0..<numberBytes])
                if let soundDescription = try? JSONDecoder().decode(SoundDescriptor.self, from: data) {
                    playSoundWithName(soundDescription.name)
                }
            }
        default:
            break
        }
    }

Now that multiple people were playing together, we added chord and single-note pads to make jamming more fun.

After getting everything working, it was fun for everyone to jam together. The biggest challenge we had was latency. Even with a byte stream, it was rare that the sample would play at the same time on both devices. The latency was inconsistent, which made laying down a simple beat pretty difficult. A potential solution would be to spin up a multiplayer game server to handle concurrent events, but we didn’t have the resources to take it further.

Overall, it was amazing to be able to turn this app idea into reality. Apple gives developers straightforward and easy-to-understand tools to accomplish otherwise difficult tasks. In a few lines of code you can create an AR session and detect surfaces and images. With a few more lines of code, you can connect and communicate with another device. Getting a project like this from idea to reality didn’t take long. Apple has added even more to ARKit since we worked on this project. Being creative with technology is the best part of our job, and we hope you’re inspired to dive in and start creating too.